Happy October!

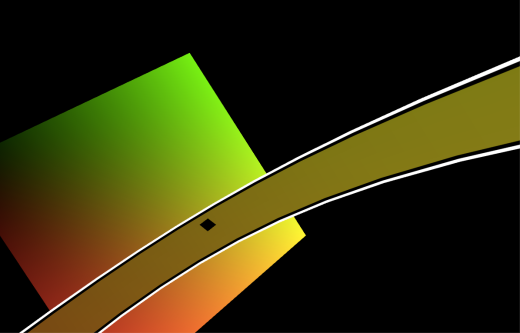

Feast your eyes: it’s Run the Gamut’s first publicized in-engine screenshot! There’s not much to see just yet, but let’s focus on what is onscreen. There’s some sort of rainbow cube in the background, and what looks like a glowing shoelace in the foreground. That would be the chromatafoil. It’s currently scripted to draw the ribbon in a ring, but before long it’ll flow behind the camera all fancy-like. It has an outline to help provide a depth cue, plus it looks cool.

Every color in the scene is the output of a function that takes each pixel’s world-space position as input. The cube is a stand-in for other color cues that I haven’t iterated on yet.
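For the curious, here’s a minimal sketch of what a position-to-color function can look like. It is not the game’s actual shader. The mapping is a hypothetical stand-in: hue comes from the angle around the vertical axis, and brightness comes from height. It’s written in Python rather than shader code so it runs on its own.

```python
import colorsys
import math


def color_at(x: float, y: float, z: float) -> tuple[float, float, float]:
    """Map a world-space position to an RGB color (hypothetical mapping).

    Hue follows the angle around the vertical (y) axis, so colors wrap
    around the origin like a color wheel; brightness rises with height.
    """
    # atan2 gives an angle in [-pi, pi]; remap it to a hue in [0, 1).
    hue = (math.atan2(z, x) / (2 * math.pi)) % 1.0
    # Brightness is 0.5 at y = 0, clamped to [0, 1].
    value = min(max(0.5 + 0.1 * y, 0.0), 1.0)
    return colorsys.hsv_to_rgb(hue, 1.0, value)


# In a shader this would run per pixel on the interpolated world position.
print(color_at(1.0, 0.0, 0.0))  # (0.5, 0.0, 0.0): red at half brightness
```

The pixel’s color depends only on where it is in the world, not on the object it belongs to. That’s why the ribbon and the cube can share one continuous color field.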

I’ve learned a lot about materials and meshes in Unity this past week. I come from an OpenGL ES 2.0 background, and there are many differences.

No vertex attributes for you!

There’s no freestanding vertex or index buffer in Unity. A Mesh is an object with an array of position vectors, an array of triangle index integers, an array of texture coordinate vectors, et cetera. There’s no way to specify which attributes lie on each vertex; the engine hides that. In effect, a Mesh vertex can only store ten kinds of information, and in the shader you’re required to label each input with a semantic (such as POSITION or TEXCOORD0), so the engine has enough information to fill in the blanks. It’s a lot of hand-holding.
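Roughly speaking, the arrangement looks like the sketch below. It is a minimal Python model, not Unity’s actual API or internals. `Mesh` is a hypothetical stand-in with a fixed menu of parallel arrays. `build_vertex_stream` is a hypothetical stand-in for the engine: it looks up each labeled shader input and interleaves the matching arrays. The assumption that the engine interleaves them this way is purely for illustration.

```python
from dataclasses import dataclass, field

Vec2 = tuple[float, float]
Vec3 = tuple[float, float, float]


@dataclass
class Mesh:
    """A fixed menu of parallel per-vertex arrays (hypothetical, Unity-like).

    There is no way to add a channel: vertex i is whatever sits at index i
    of each array, and triangles holds three vertex indices per triangle.
    """
    vertices: list[Vec3] = field(default_factory=list)
    normals: list[Vec3] = field(default_factory=list)
    uv: list[Vec2] = field(default_factory=list)
    triangles: list[int] = field(default_factory=list)


def build_vertex_stream(mesh: Mesh, shader_inputs: list[str]) -> list[float]:
    """Interleave the channels a shader asks for, in the order it labels them.

    The labels play the role of shader input semantics; only these three
    are understood by this sketch (anything else raises KeyError).
    """
    channels = {"POSITION": mesh.vertices, "NORMAL": mesh.normals, "TEXCOORD0": mesh.uv}
    stream: list[float] = []
    for i in range(len(mesh.vertices)):
        for label in shader_inputs:
            stream.extend(channels[label][i])
    return stream


tri = Mesh(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    uv=[(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)],
    triangles=[0, 1, 2],
)
print(build_vertex_stream(tri, ["POSITION", "TEXCOORD0"]))
```

In OpenGL ES, by contrast, the programmer defines the buffer layout and the attribute pointers directly, so there’s no fixed menu to choose from.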

The advantage, I suppose, is that Unity’s engine can directly expose per-vertex things (like “bone weights”, which a large fraction of games use) to programmers, which accelerates the development of projects that need them. The disadvantage is that if a project needs sixteen independent values per vertex, the programmer has to clump unrelated values into attribute slots meant for other things (colors, texture coordinates and so on) to pass them to the shader, which then has to un-clump them. Technically, programmers who store non-image data in textures already have to un-clump values from samples in their shaders, even in OpenGL ES; the disadvantage is mostly isolated to generating weird meshes… like the chromatafoil’s.
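The clumping and un-clumping amounts to an agreed-upon packing order between the mesh-building code and the shader. Here’s a minimal Python sketch of that idea. It assumes four spare per-vertex slots that each hold four floats. The slot names are hypothetical placeholders, since which engine channels actually carry four components varies.

```python
# Hypothetical slot names: four spare per-vertex attributes assumed to hold
# four floats each. Which engine channels really carry four components
# varies, so treat these labels as placeholders.
SLOTS = ("color", "texcoord1", "texcoord2", "texcoord3")


def clump(values: list[float]) -> dict[str, tuple[float, ...]]:
    """Pack sixteen unrelated per-vertex floats into four 4-float slots, in order."""
    if len(values) != 4 * len(SLOTS):
        raise ValueError(f"expected {4 * len(SLOTS)} values, got {len(values)}")
    return {name: tuple(values[4 * i:4 * i + 4]) for i, name in enumerate(SLOTS)}


def unclump(slots: dict[str, tuple[float, ...]]) -> list[float]:
    """The shader's side of the deal: read the slots back in the same order."""
    return [v for name in SLOTS for v in slots[name]]


packed = clump([float(n) for n in range(16)])
print(packed["color"])  # (0.0, 1.0, 2.0, 3.0): four values that aren't a color at all
```

The packing order has to match exactly on both sides. Nothing in the types will catch a mismatch, because every slot is just four floats.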

Who runs Rendertown? Master Blaster runs Rendertown.

In OpenGL ES, it’s the programmer’s responsibility to instruct the GPU to draw frames when the time is right. That allows someone in my position to optimize what the GPU draws. For instance, if the chromatafoil’s mesh contains a hundred segments, but only the first twenty have been used, I can tell the GPU to draw just the first twenty segments. As more segments get used, I’d increase the count in the draw call. Furthermore, because I’m only repositioning the vertices at the ends of the ribbon every frame, I can also instruct OpenGL ES to upload only those changes to the GPU (with `glBufferSubData`), rather than the entire set of vertices.
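Here’s a minimal sketch of that bookkeeping, written in plain Python with no actual GL calls. `RibbonBuffer` and its methods are hypothetical names. The layout is also an assumption: two vertices per cross-section, six indices per segment, and indices stored segment by segment. In real OpenGL ES code, each upload run would become a `glBufferSubData` call, after converting vertex offsets to bytes. `index_count()` would be the `count` argument to `glDrawElements`.

```python
class RibbonBuffer:
    """Hypothetical CPU-side mirror of a preallocated ribbon vertex buffer.

    Each segment boundary has two vertices (the ribbon's left and right
    edges), and each segment is drawn as two triangles (six indices).
    """

    def __init__(self, max_segments: int):
        self.max_segments = max_segments
        self.vertices = [(0.0, 0.0, 0.0)] * (2 * (max_segments + 1))
        self.segments_used = 0
        self._dirty: set[int] = set()  # boundaries changed since last upload

    def set_boundary(self, b: int, left, right) -> None:
        """Move one cross-section of the ribbon and remember it needs uploading."""
        if not 0 <= b <= self.max_segments:
            raise IndexError(f"boundary {b} out of range")
        self.vertices[2 * b] = left
        self.vertices[2 * b + 1] = right
        self._dirty.add(b)
        # Assuming boundaries fill in order, setting boundary b means
        # segments 0 through b - 1 can be drawn.
        self.segments_used = max(self.segments_used, b)

    def take_upload_ranges(self) -> list[tuple[int, int]]:
        """Return (first_vertex, vertex_count) runs to upload, then forget them.

        Adjacent boundaries merge into one run, so moving only the two ends
        of a long ribbon produces two tiny uploads instead of one big one.
        """
        runs: list[tuple[int, int]] = []
        for b in sorted(self._dirty):
            first = 2 * b
            if runs and runs[-1][0] + runs[-1][1] == first:
                runs[-1] = (runs[-1][0], runs[-1][1] + 2)
            else:
                runs.append((first, 2))
        self._dirty.clear()
        return runs

    def index_count(self) -> int:
        """Indices to draw: six per segment in use, not per segment allocated."""
        return 6 * self.segments_used
```

For example, filling boundaries 0 through 20 of a `RibbonBuffer(100)` gives `index_count() == 120` (twenty segments’ worth) and a single upload run, `(0, 42)`. Moving just boundaries 0 and 20 afterward yields `[(0, 2), (40, 2)]`.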

You probably know where this is going.

A Mesh in Unity has public properties like `vertices` and `triangles`. If I change one vertex or triangle, it seems that I have to reassign a complete array of vertices or triangle indices to the mesh. Does Unity bother to optimize the vertex upload, or the draw call? I don’t think it can, efficiently, but who knows.

Still, I can’t complain.

The fact is, despite all this ham-fisted weirdness, I’ve been able to move pretty quickly in Unity. And if I had attempted the optimizations I’ve discussed with less OpenGL ES experience, they probably would have been a real hassle to put together.

I’ve got plenty more work to do before this is even a game, so, time to quit babblin’! Watch this space.